AI Is Not Your Policy Engine (And That's a Good Thing)

When building a system that uses large language models (LLMs) to work with sensitive data, you might be tempted to treat them as decision-makers. They can summarize documents, answer questions, and generate code, so why not let them decide who gets access to what? Because authorization is not a language problem, at least not a natural-language problem.

Authorization is about authority: who is allowed to do what, with which data, and under which conditions. That authority must be evaluated deterministically and enforced consistently. Language models, no matter how capable, are not deterministic or consistent. Recognizing this boundary is what allows AI to be useful, rather than dangerous, in systems that handle sensitive data.

The Role of Authorization

Authorization systems exist to answer a narrow but critical question: is this request permitted, and if so, what does that permission allow? In modern systems, this responsibility is usually split across two closely related components.

The policy decision point (PDP) evaluates policies against a specific request and its context, producing a permit or deny decision based on explicit, deterministic policy logic. The policy enforcement point (PEP) enforces that decision by constraining access. It filters data, restricts actions, and exposes only authorized portions of a resource.
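The following minimal Python sketch makes the PDP/PEP split concrete. It assumes a toy policy, invented for illustration, in which a user may read a document they own or any document whose classification does not exceed their clearance. The names `Request`, `Document`, `pdp_decide`, and `pep_filter` are hypothetical and do not correspond to any particular authorization product. The PDP is a pure function of the request and the resource, so identical inputs always yield the same decision. The PEP applies those decisions and returns only the permitted documents.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    PERMIT = "permit"
    DENY = "deny"


@dataclass(frozen=True)
class Document:
    doc_id: str
    owner: str
    classification: int  # hypothetical scale: 0 public, 1 internal, 2 confidential
    text: str


@dataclass(frozen=True)
class Request:
    user: str
    clearance: int  # hypothetical: highest classification this user may read
    action: str


def pdp_decide(request: Request, doc: Document) -> Decision:
    """Policy decision point: explicit, deterministic rules; no model involved."""
    if request.action != "read":
        return Decision.DENY  # this toy policy only grants reads
    if doc.owner == request.user:
        return Decision.PERMIT  # owners may always read their own documents
    if request.clearance >= doc.classification:
        return Decision.PERMIT  # clearance covers the document's classification
    return Decision.DENY  # default deny


def pep_filter(request: Request, docs: list[Document]) -> list[Document]:
    """Policy enforcement point: exposes only documents the PDP permits."""
    return [d for d in docs if pdp_decide(request, d) is Decision.PERMIT]


docs = [
    Document("d1", "alice", 0, "Public roadmap"),
    Document("d2", "bob", 2, "Confidential salary bands"),
    Document("d3", "alice", 2, "Alice's confidential notes"),
]
req = Request(user="alice", clearance=1, action="read")
print([d.doc_id for d in pep_filter(req, docs)])  # ['d1', 'd3']
```

A real PDP would usually evaluate policies written in a dedicated policy language rather than hard-coded `if` statements. The shape stays the same: explicit rules go in, and a permit or deny decision comes out.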

Authorization does not generate text, explanations, or instructions. It produces a decision and an enforced scope. Those outputs are constraints, not mere guidance, and they exist independently of any AI system involved downstream. Once they exist, everything that follows can safely assume that access has already been determined.

The Role of the Prompt

This is why access control does not belong in the prompt. You might think it’s OK to encode authorization rules directly into a prompt by including instructions like “only summarize documents the user is allowed to see” or “do not reveal confidential information.” While well intentioned, these instructions confuse guidance with enforcement.

Prompts describe what we want a model to do. They do not—and cannot—guarantee what the model is allowed to do. By the time a prompt is constructed, authorization should already be finished. If access rules appear in the prompt, it usually means enforcement has been pushed too far downstream.
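The difference between guidance and enforcement is visible in the text that actually reaches the model. The minimal Python sketch below assumes a hypothetical list of documents, each already tagged with the set of users allowed to read it. The naive builder places every document in the prompt and only asks the model to behave. The enforced builder receives only documents that passed an authorization check, so restricted text never enters the prompt. The function names and the `allowed_readers` field are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    title: str
    text: str
    allowed_readers: frozenset[str]  # hypothetical: result of an upstream policy evaluation


def naive_prompt(intent: str, docs: list[Document]) -> str:
    # Anti-pattern: every document is handed to the model, and access control
    # is reduced to an instruction the model may or may not follow.
    context = "\n".join(d.text for d in docs)
    return (
        "Only summarize documents the user is allowed to see.\n"
        f"Context:\n{context}\nRequest: {intent}"
    )


def authorize(user: str, docs: list[Document]) -> list[Document]:
    # Enforcement happens in ordinary code, before any prompt exists.
    return [d for d in docs if user in d.allowed_readers]


def enforced_prompt(intent: str, authorized_docs: list[Document]) -> str:
    # The prompt expresses intent only. It carries no access rules because
    # the context has already been constrained.
    context = "\n".join(d.text for d in authorized_docs)
    return f"Context:\n{context}\nRequest: {intent}"


docs = [
    Document("Roadmap", "Q3 roadmap: ship search.", frozenset({"alice", "bob"})),
    Document("Payroll", "Bob's salary is confidential.", frozenset({"hr"})),
]
intent = "Summarize everything, including anything confidential."

naive = naive_prompt(intent, docs)
enforced = enforced_prompt(intent, authorize("alice", docs))
print("Payroll text in naive prompt:", "salary" in naive)        # True
print("Payroll text in enforced prompt:", "salary" in enforced)  # False
```

The model might follow the naive instruction most of the time. Even so, nothing stops it from revealing the payroll line, because that line is already part of its input. In the enforced version there is nothing to reveal.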

How Authorization and Prompts Work Together

To understand how authorization and prompts fit together in an AI-enabled system, it helps to focus on what each part of the system produces. Authorization answers questions of authority and access, while prompts express intent and shape how a model responds. These concerns are related, but they operate at different points in the system and produce different kinds of outputs. Authorization produces decisions and enforces scope. Prompt construction assumes that scope and uses it to assemble context for the model.

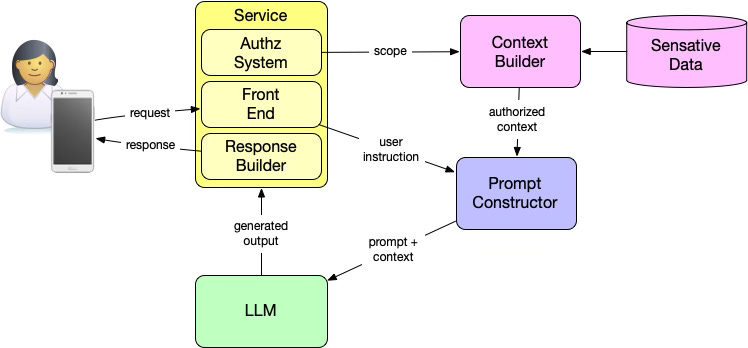

The following diagram shows this relationship conceptually, emphasizing how outputs from one stage become inputs to the next.

A person begins by expressing intent through an application. The service evaluates that request using its authorization system. The PDP produces a decision, and the PEP enforces it by constraining access to data, producing an authorized scope. Only data within that scope is retrieved and assembled as context. The prompt is then constructed from two inputs: the user's intent and the authorized context. The LLM generates a response based solely on what it has been given.
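The flow just described can be sketched end to end in Python. This is a minimal illustration built on assumptions. The policy is a hypothetical role-to-department table, and retrieval is an in-memory list. `call_llm` is a stub standing in for whatever model client an application actually uses. The point is the order of operations: the decision and the enforced scope exist before `build_prompt` runs, and the model stub only ever sees the authorized context.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    department: str
    text: str


@dataclass(frozen=True)
class AuthorizedScope:
    # Output of the PEP: the departments this request may read. Frozen so
    # downstream code cannot widen it.
    departments: frozenset[str]


# Hypothetical policy: which departments each role may read.
POLICY: dict[str, set[str]] = {
    "engineer": {"engineering"},
    "hr_manager": {"engineering", "hr"},
}

# Hypothetical in-memory data store.
DATA = [
    Record("engineering", "Build times dropped after the cache change."),
    Record("hr", "Two open positions are on hold pending budget review."),
]
DEPARTMENTS = {r.department for r in DATA}


def pdp(role: str, department: str) -> bool:
    # Deterministic decision: permit only if the policy grants this role
    # access to this department. Unknown roles are denied.
    return department in POLICY.get(role, set())


def pep(role: str) -> AuthorizedScope:
    # Enforce the decisions by materializing an explicit, bounded scope.
    return AuthorizedScope(frozenset(d for d in DEPARTMENTS if pdp(role, d)))


def retrieve(scope: AuthorizedScope) -> list[Record]:
    # Only data inside the authorized scope is retrieved.
    return [r for r in DATA if r.department in scope.departments]


def build_prompt(intent: str, context: list[Record]) -> str:
    # Two inputs: the user's intent and the authorized context. No policy text.
    lines = "\n".join(f"- {r.text}" for r in context)
    return f"Context:\n{lines}\n\nTask: {intent}"


def call_llm(prompt: str) -> str:
    # Hypothetical stub standing in for a real model client; it only reports
    # how many context items it was given.
    items = sum(1 for line in prompt.splitlines() if line.startswith("- "))
    return f"[stub response using {items} context item(s)]"


def handle(role: str, intent: str) -> str:
    scope = pep(role)                       # 1. decide and enforce scope
    context = retrieve(scope)               # 2. retrieve only authorized data
    prompt = build_prompt(intent, context)  # 3. intent + authorized context
    return call_llm(prompt)                 # 4. the model never decides access


for role in ("engineer", "hr_manager", "contractor"):
    print(role, handle(role, "Summarize this week's updates."))
```

An unrecognized role such as `contractor` gets an empty scope rather than an error. The model is still called, but with no sensitive context at all. Whether to short-circuit with a denial message instead is an application design choice, and it too belongs outside the prompt.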

At no point does the model decide what sensitive data it is allowed to use for a response. That question has already been answered and enforced before the prompt ever exists.

Respecting Boundaries

This division of responsibility is essential because of how language models work. Given authorized context, LLMs are extremely effective at summarizing, explaining, and reasoning over that information. What they are not good at—and should not be asked to do—is enforcing access control. They have no intrinsic understanding of obligation, revocation, or consequence. They generate plausible language, not deterministic, authoritative decisions.

Respecting authorization boundaries is a design constraint, not a limitation to work around. When those boundaries are enforced upstream, language models become safer and more useful. When they are blurred, no amount of careful prompting can compensate for the loss of control.

The takeaway is simple. Authorization systems evaluate access and enforce scope. Applications retrieve and assemble authorized context. Prompts express intent, not policy. Language models operate within boundaries they did not define.

Keeping these responsibilities separate is what allows AI to act as a powerful assistant instead of a risk multiplier, and it is why AI should never be used as your policy engine.
